IBM pushes qubit count over 400 with new processor


Today, IBM announced the latest generation of its family of avian-themed quantum processors, the Osprey. With more than three times the qubit count of its previous-generation Eagle processor, Osprey is the first to offer more than 400 qubits, which indicates the company remains on track to release the first 1,000-qubit processor next year.

Despite the high qubit count, there's no need to rush out and re-encrypt all your sensitive data just yet. While the error rates of IBM’s qubits have steadily improved, they’ve still not reached the point where all 433 qubits in Osprey can be used in a single algorithm without a very high probability of an error. For now, IBM is emphasizing that Osprey is a sign that the company can stick to its aggressive road map for quantum computing, and that the work needed to make it useful is in progress.

On the road

To understand IBM’s announcement, it helps to understand the quantum computing market as a whole. There are now a lot of companies in the quantum computing market, from startups to large, established companies like IBM, Google, and Intel. They’ve bet on a variety of technologies, from trapped atoms to spare electrons to superconducting loops. Pretty much all of them agree that to reach quantum computing’s full potential, we need to get to where qubit counts are in the tens of thousands, and error rates on each individual qubit are low enough that these can be linked together into a smaller number of error-correcting qubits.

There’s also a general consensus that quantum computing can be useful for some specific problems much sooner. If qubit counts are sufficiently high and error rates get low enough, it's possible that re-running specific calculations enough times to avoid an error will still get answers to problems that are difficult or impossible to solve on conventional computers.

The question is what to do while we’re working to get the error rate down. Since the probability of errors largely scales with qubit counts, adding more qubits to a calculation increases the likelihood that the calculation will fail. I’ve had one executive at a trapped-ion qubit company tell me that it would be trivial for them to trap more ions and have a higher qubit count, but they don't see the point: the increase in errors would make it difficult to complete any calculations. Or, to put it differently, to have a good chance of getting a result from a calculation, you’d have to use fewer qubits than are available.
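To make that trade-off concrete, here is a minimal back-of-the-envelope sketch. It assumes every operation fails independently with the same probability, which is a simplification of how real hardware behaves. The function names, the error rate, and the operations-per-qubit figure are all illustrative assumptions, not numbers from IBM.

```python
import math


def success_probability(num_qubits: int, ops_per_qubit: int, error_rate: float) -> float:
    """Chance that a run finishes with no error at all.

    Assumes (hypothetically) that each operation fails independently
    with probability `error_rate`.
    """
    total_ops = num_qubits * ops_per_qubit
    return (1.0 - error_rate) ** total_ops


def runs_needed(p_success: float, confidence: float = 0.99) -> int:
    """Repetitions needed so that at least one run is error-free
    with probability `confidence`."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    if p_success == 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_success))


if __name__ == "__main__":
    # Illustrative values only: 0.1% error per operation, 10 operations per qubit.
    for qubits in (27, 127, 433):
        p = success_probability(qubits, ops_per_qubit=10, error_rate=0.001)
        print(f"{qubits} qubits: p(no error) = {p:.4f}, runs for 99% = {runs_needed(p)}")
```

Under these assumptions, the chance of a clean run shrinks exponentially as qubits are added, so the number of repetitions needed grows quickly. That is the arithmetic behind using fewer qubits than a processor makes available. Note that this only counts error-free runs; picking the correct answer out of many noisy runs is a separate problem the sketch does not address.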

Osprey doesn't fundamentally change any of that. While the person at IBM didn't directly acknowledge it (and we asked, twice), it's unlikely that any single calculation could use all 433 qubits without encountering an error. But, as Jerry Chow, director of Infrastructure with IBM’s quantum group, explained, raising qubit counts is just one branch of the company’s development process. Releasing the results of that process as part of a long-term road map is important because of the signals it sends to developers and potential end-users of quantum computing.

On the map

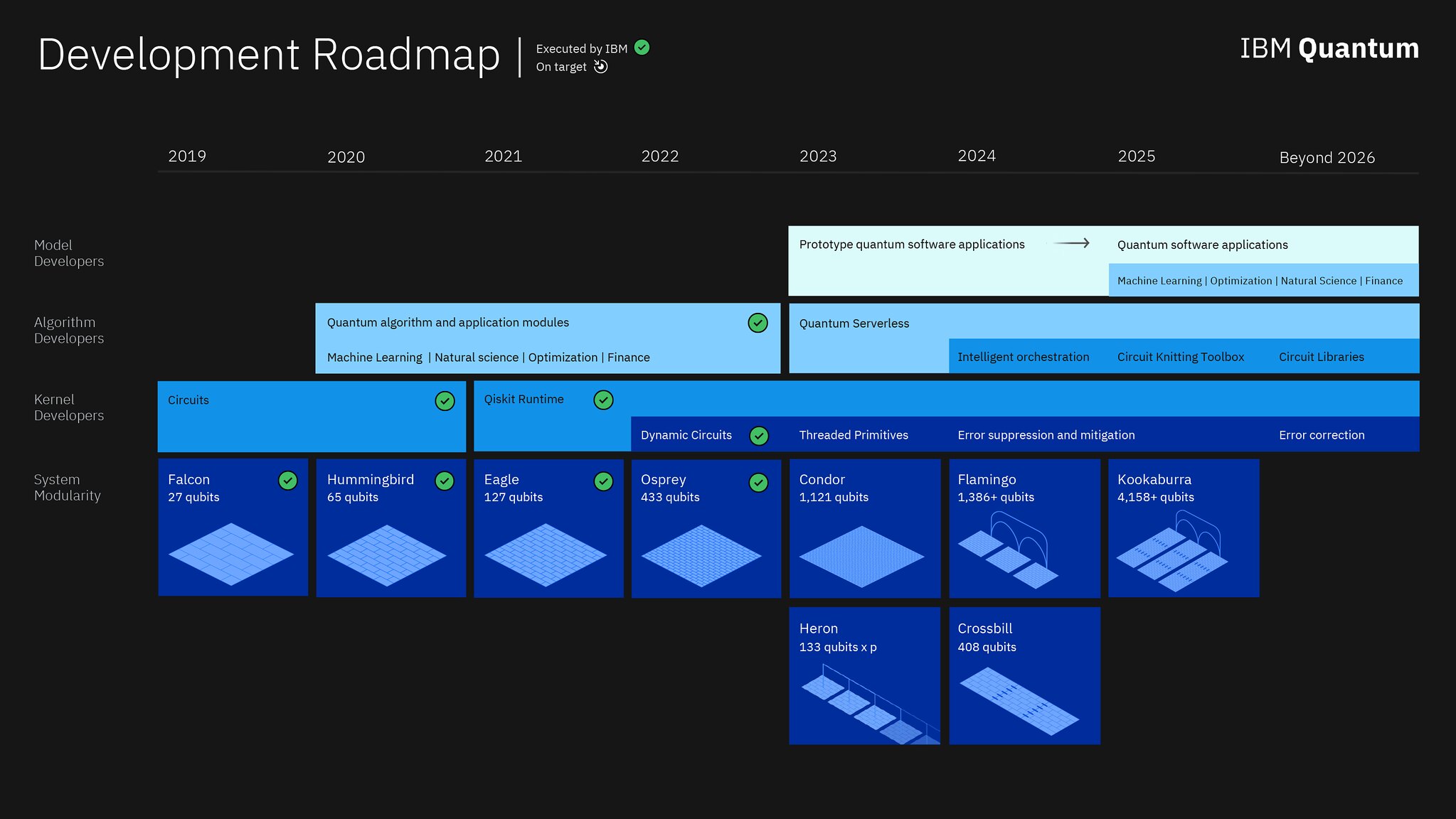

IBM released its road map in 2020. It called for last year’s Eagle processor to be the first with more than 100 qubits, got Osprey’s qubit count right, and indicated that the company would be the first to clear 1,000 qubits with next year’s Condor. This year’s iteration of the road map extends the timeline and provides a lot of additional details on what the company is doing beyond raising qubit counts.

IBM’s current quantum road map is more elaborate than its initial offering.

The most notable addition is that Condor won't be the only hardware released next year; an additional processor called Heron is on the map. It has a lower qubit count but has the potential to be linked with other processors to form a multi-chip package (a step that one competitor in the space has already taken). When asked what the biggest barrier to scaling qubit count was, Chow answered that “it’s size of the actual chip. Superconducting qubits aren’t the smallest structures; they’re actually pretty visible to your eye.” Fitting more of them onto a single chip creates challenges for the material structure of the chip, as well as the control and readout connections that need to be routed within it.

“We think that we’re going to turn this crank one more time, using this basic single chip type of technology with Condor,” Chow told Ars. “But honestly, it's impractical if you start to make single chips that are probably a large proportion of a wafer size.” So, while Heron will start out as a side branch of the development process, all the chips beyond Condor will have the capability to form links with additional processors.
